In a stunning confirmation that marks one of the most significant strategic partnerships in recent tech history, Apple officially announced today on CNBC that Google's Gemini AI will serve as the foundation for the next-generation version of Siri. This isn't just another software update—it represents a fundamental admission from Cupertino that the AI race has grown too complex for even the world's most valuable company to tackle alone.

The Official Confirmation

Apple's statement to CNBC's Jim Cramer was measured but telling: "After careful evaluation, we determined that Google's technology provides the most capable foundation for Apple Foundation Models and we're excited about the innovative new experiences it will unlock for our users."

Behind this corporate-speak lies a remarkable reality: Apple, a company famous for its "not invented here" philosophy, is paying its biggest rival approximately $1 billion annually to fix what has become its most embarrassing product failure. The revamped Siri is expected to launch with iOS 26.4 around March or April 2026, though some reports suggest a full rollout may not arrive until Fall 2026.

The Scale of Apple's AI Problem

To understand why this partnership matters, you need to understand just how badly Siri has fallen behind. The numbers are damning:

The Parameter Gap: Apple's current cloud-based Apple Intelligence model reportedly has 150 billion parameters. The Google Gemini model that will power the new Siri is said to have 1.2 trillion, eight times as many. Parameter count is only a rough proxy for capability, but a gap this size points to a step change rather than an incremental improvement.
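The eight-fold figure follows directly from the two reported parameter counts. Here is a minimal Python check, assuming the numbers quoted in this article are accurate (they are reported figures, not confirmed specifications, and the constant and function names are purely illustrative):

```python
# Figures as reported in this article; neither is a confirmed specification.
APPLE_CLOUD_MODEL_PARAMS = 150_000_000_000    # 150 billion
GEMINI_FOR_SIRI_PARAMS = 1_200_000_000_000    # 1.2 trillion


def parameter_ratio(larger: int, smaller: int) -> float:
    """Return how many times larger one parameter count is than another."""
    if smaller <= 0:
        raise ValueError("parameter count must be positive")
    return larger / smaller


ratio = parameter_ratio(GEMINI_FOR_SIRI_PARAMS, APPLE_CLOUD_MODEL_PARAMS)
print(f"Reported size ratio: {ratio:.0f}x")
```

Keep in mind that this only compares model size. It says nothing about accuracy, latency, or how well either model is tuned for assistant tasks.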

The Accuracy Problem: Internal testing revealed that Siri lagged significantly behind competitors, with OpenAI's ChatGPT delivering 25% better accuracy. Reports suggest that in basic query tests, Siri achieved only 34% accuracy—a performance so poor that Apple employees allegedly nicknamed their AI group "AIMLess."

The User Experience Disaster: Ask anyone who uses Siri regularly, and you'll hear the same frustrations echoed across social media and tech forums. Users report that Siri frequently misunderstands basic commands, provides wrong information, makes bizarre music recommendations, and has somehow gotten worse over time rather than better. For a company that prides itself on user experience, this represents a systemic failure.

What Went Wrong Inside Apple

The most revealing aspect of this partnership is what it says about Apple's internal dysfunction around AI development. Multiple reports from Bloomberg and The Information paint a troubling picture:

Leadership Skepticism: Former AI chief John Giannandrea reportedly told employees after ChatGPT's 2022 launch that he didn't believe chatbots added much value for users—a catastrophic misread of the AI revolution that was about to unfold. This skepticism at the top trickled down, creating a culture that moved too slowly while competitors raced ahead.

Technical Chaos: Apple initially planned to build both small and large language models dubbed "Mini Mouse" and "Mighty Mouse." Then leadership pivoted to a single cloud-based model. Then they changed direction again. This indecision frustrated engineers and led to departures from the AI team. When they tried merging Siri's legacy code with new AI capabilities, the integration broke core features, creating what insiders described as a "buggy, unstable experience."

Misplaced Priorities: While competitors focused on transformative AI capabilities, Siri's leadership celebrated "small wins" like reducing response times and removing "Hey" from the "Hey Siri" wake phrase—a project that took over two years to complete. Meanwhile, fundamental improvements in accuracy and contextual understanding languished.

The Reality Check: When Apple finally got serious about AI in response to ChatGPT, internal tests showed their own models didn't perform nearly as well as OpenAI's technology. Apple managers even forbade engineers from using competing models in final products, only allowing them for benchmarking—effectively handicapping development while the competition surged ahead.

The Gemini Solution: Technical Details

Apple's partnership with Google represents a pragmatic solution to bridge the capability gap:

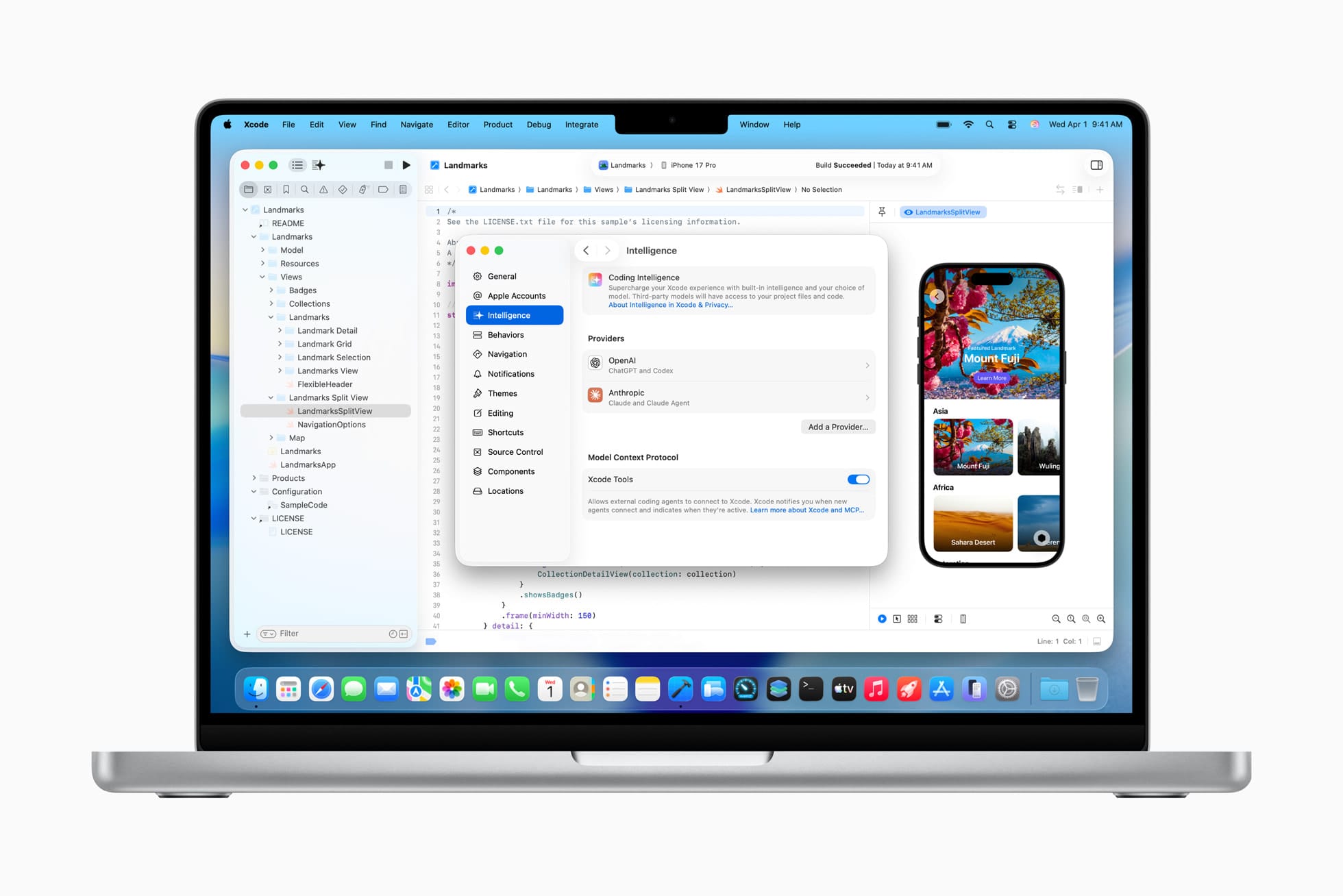

The Architecture: The new Siri will use a custom version of Gemini 3 Pro running on Apple's Private Cloud Compute (PCC) servers. This hybrid approach means lightweight, privacy-sensitive tasks remain on-device using Apple's silicon, while complex queries requiring world knowledge and advanced reasoning are offloaded to Google's model—but crucially, still processed on Apple-controlled servers.

Privacy Preservation: Apple has structured this deal to maintain its privacy principles. The Gemini models run on Apple's specialized servers, ensuring user data never touches Google's infrastructure and cannot be used for training Google's models. This "sandboxing" approach allows Apple to leverage Google's AI capabilities without compromising its core privacy commitments.

The Hybrid Model: Apple and Google haven't disclosed which specific features will use Gemini versus Apple's own models, but reports suggest Gemini will power the most complex Siri functions—query planning, knowledge search, multi-step task execution, and on-screen awareness. Apple's models will continue handling other Apple Intelligence features.
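To make the hybrid split concrete, here is a rough Python sketch of how a request router along these lines might look. Everything in it is an assumption for illustration: Apple has not published how routing works, and the names `SiriRequest`, `Backend`, `CLOUD_TASKS`, and `route` are hypothetical. The only grounded part is the list of task categories that reports associate with the Gemini-based model.

```python
from dataclasses import dataclass
from enum import Enum


class Backend(Enum):
    ON_DEVICE = "on-device Apple model"
    PRIVATE_CLOUD = "Gemini-based model on Private Cloud Compute"


# Task categories that reports associate with the Gemini-based model.
# The labels are illustrative, not Apple identifiers.
CLOUD_TASKS = frozenset({
    "query_planning",
    "knowledge_search",
    "multi_step_task",
    "on_screen_awareness",
})


@dataclass(frozen=True)
class SiriRequest:
    text: str
    task: str  # hypothetical category label assigned upstream


def route(request: SiriRequest) -> Backend:
    """Send complex categories to the cloud model; keep everything else local."""
    if request.task in CLOUD_TASKS:
        return Backend.PRIVATE_CLOUD
    return Backend.ON_DEVICE


if __name__ == "__main__":
    examples = [
        SiriRequest("Set a timer for ten minutes", "timer"),
        SiriRequest("When does my mother's flight land?", "knowledge_search"),
    ]
    for req in examples:
        print(f"{req.text!r} -> {route(req).value}")
```

A real system would presumably classify requests with a model rather than a fixed lookup table, and might fall back to the cloud when the on-device model is unsure. The sketch only shows the basic shape of the decision: local by default, cloud for the heavy categories.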

What This Means for Users

The March 2026 launch (assuming no further delays) promises several transformative capabilities:

Personal Context Awareness: The new Siri will finally understand your personal information across apps. Ask about your mother's flight information, and Siri will pull data from Mail and Messages to give you a complete answer—including her arrival time and lunch reservation plans.

On-Screen Awareness: Siri will see what's on your screen and provide contextual assistance, understanding not just what you're saying but what you're doing.

App Integration: Deep per-app controls will allow Siri to execute complex, multi-step tasks across multiple applications, something the current Siri handles poorly.

Conversational Intelligence: Rather than the rigid command-response pattern of current Siri, the new version should handle natural conversation with proper context retention and nuanced understanding.

Better Accuracy: With eight times the parameters and Google's proven AI architecture, basic tasks that currently fail should finally work reliably.

The Competitive Context

This partnership reveals fascinating dynamics in the AI industry:

The Irony: Google already pays Apple around $20 billion annually to be the default search engine on Apple devices. Now Apple will pay Google roughly $1 billion a year for AI capabilities. Money is flowing in both directions between these fierce rivals, a sign of how the AI era is forcing even competitors into strategic alliances.

Microsoft and OpenAI: While OpenAI, Microsoft's close partner, initially appeared to have the inside track thanks to the ChatGPT integration in iOS 18, Google has emerged as Apple's primary "reasoning partner" for Siri's core transformation. This shifts the competitive balance significantly.

Temporary Solution: Apple is clear that this is a bridge, not a destination. The company is reportedly developing its own 1 trillion parameter model targeted for late 2026, potentially allowing them to transition away from Gemini when their in-house solution is ready. This mirrors Apple's historical pattern—relying on Intel until their own chips were ready, using Google Maps until Apple Maps launched, leaning on third-party services until proprietary solutions matured.

The China Challenge: Google services are banned in China, forcing Apple to pursue separate partnerships with Alibaba and Baidu for the Chinese market—adding complexity to an already complicated AI strategy.

The Broader Strategic Implications

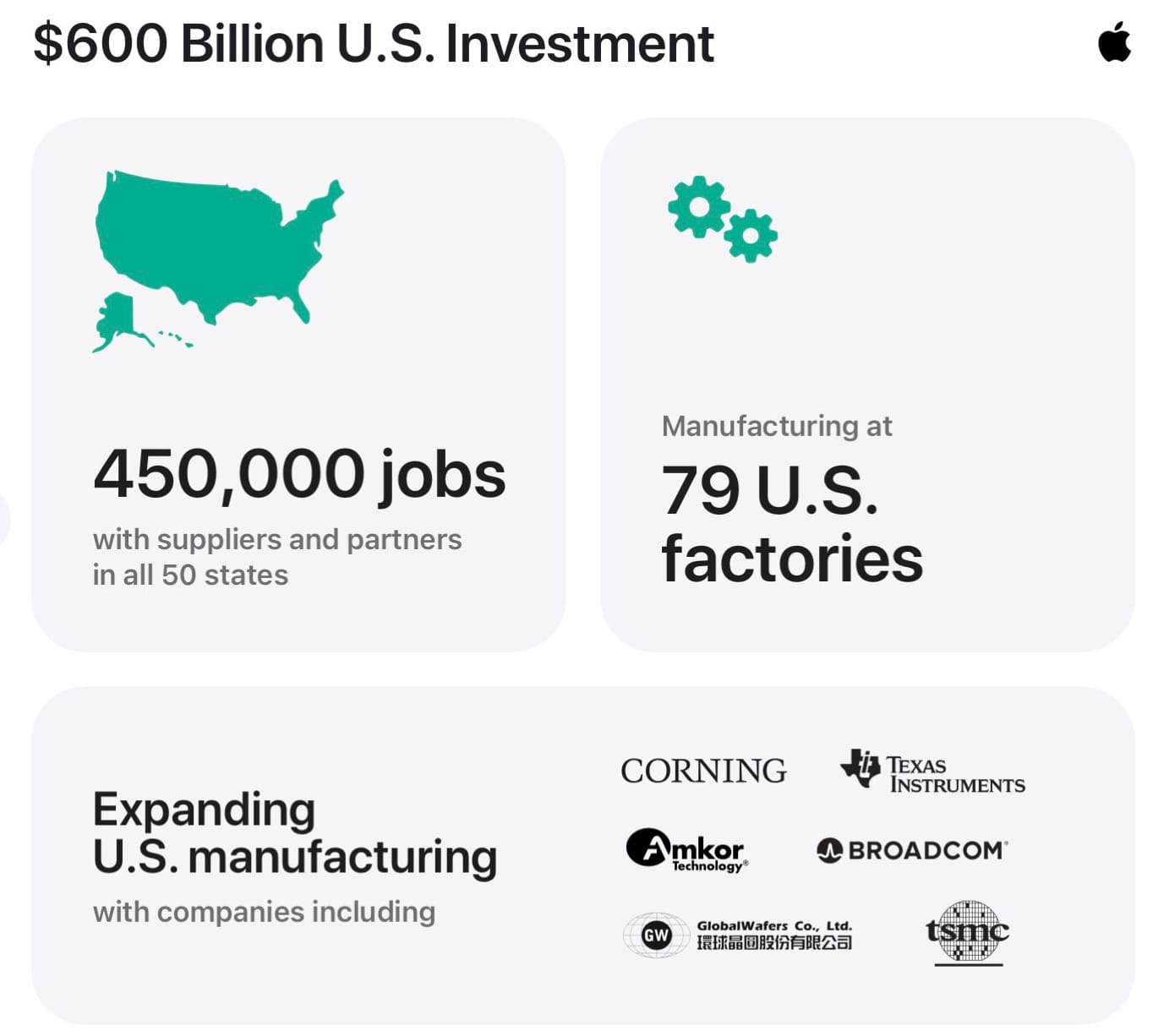

Apple's AI Ambitions: This partnership sits within Apple's broader "Apple Intelligence" initiative, which will span across devices including a new smart home display, updated Apple TV and HomePod mini, and expanded on-device intelligence across Messages, Notes, Mail, and Safari.

The WWDC 2026 Preview: Apple is expected to showcase the Gemini-powered Siri at WWDC 2026, positioning AI as the centerpiece of its software strategy. The stakes couldn't be higher—Apple needs to demonstrate that the wait and the partnership were worth it.

Third-Party Impact: If Apple successfully integrates deep cross-app automation into Siri, many third-party productivity tools could find their core value proposition subsumed by the operating system itself. Startups building "AI agents" should be nervous.

Brand and Perception: For years, Apple has positioned itself as the privacy-focused alternative to Google. Now they're using Google's AI brain while maintaining that their privacy architecture keeps user data safe. Whether consumers buy this narrative will be crucial to the partnership's success.

The Reality Check: Will It Work?

There are legitimate reasons for skepticism:

Apple's Track Record: Apple promised an upgraded Siri in iOS 18, then delayed it. Multiple times. The demo at WWDC 2024 showing Siri accessing emails for flight data and lunch plans? Reports suggest it was "effectively fictitious." Internal testers continue raising concerns about performance even with the Gemini integration.

The Integration Challenge: Balancing on-device speed with cloud-based intelligence while maintaining privacy guarantees represents a massive technical challenge. The complexity of this hybrid model is cited as a primary reason the full rollout may slip to Fall 2026.

User Expectations: Siri has disappointed users for so long that skepticism is warranted. The assistant will need to dramatically outperform current capabilities to win back trust.

Competitive Pressure: By the time this launches in 2026, Google Assistant, ChatGPT, and other AI agents will have continued advancing. Apple needs to not just catch up but leapfrog the competition—a tall order given their struggles to date.

The Bottom Line

Apple's confirmation of the Google Gemini partnership represents both a pragmatic solution and a sobering admission. The world's most valuable company, with $130 billion in cash reserves, couldn't build a competitive AI assistant on its own timeline. Rather than continue struggling alone, Apple made the hard choice to license technology from its biggest rival.

For users, this should be good news—if Apple can successfully integrate Gemini while maintaining privacy and delivering the promised capabilities. The eight-fold increase in model parameters, combined with Apple's expertise in user experience and ecosystem integration, could finally deliver the Siri we've been waiting over a decade to receive.

But the path from announcement to execution is littered with Apple's previous AI promises. The company has delayed, struggled, and disappointed repeatedly. Whether March 2026 brings redemption or another round of excuses will define not just Siri's future, but Apple's credibility in the AI era.

The partnership with Google is a calculated risk—a bridge to buy time while Apple builds its own capabilities. The question isn't whether Apple can eventually develop competitive AI models (they likely will). The question is whether users will still care by the time they do, or if the window of opportunity will have closed while Siri remained the punchline of too many jokes.

One thing is certain: Apple's AI strategy is no longer theoretical. With a billion-dollar-a-year commitment to Google and a March 2026 launch target, we're about to find out if the world's most valuable company can turn its most troubled product into its most impressive AI showcase.

What are your thoughts on Apple partnering with Google for Siri? Will you trust the privacy guarantees, or are you skeptical of this unlikely alliance? Let me know in the comments below or reach out on social media.
